In the rapidly evolving field of artificial intelligence, the quest for efficiency is unending. A recent paper titled “ReWOO: Decoupling Reasoning from Observations for Efficient Augmented Language Models” by Binfeng Xu and his team has made a significant stride in this direction. The paper introduces a novel paradigm, ReWOO (Reasoning WithOut Observation), that aims to enhance the efficiency of Augmented Language Models (ALMs) by decoupling the reasoning process from external observations.

The Problem with Current ALMs

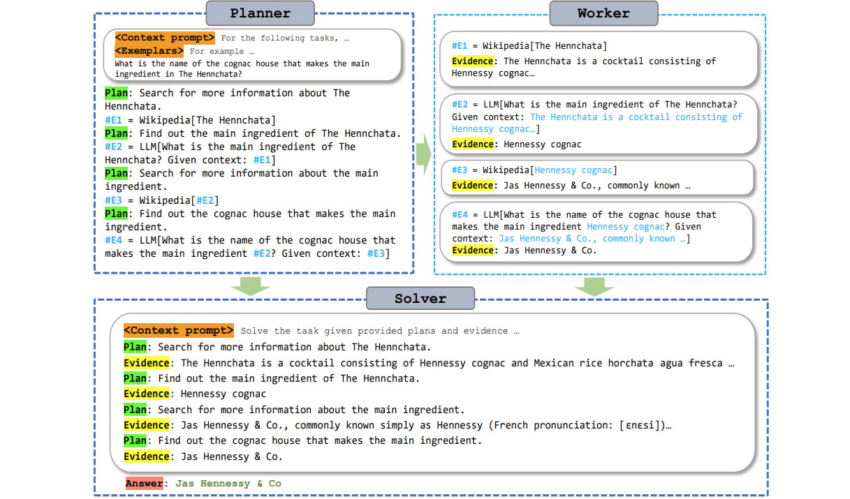

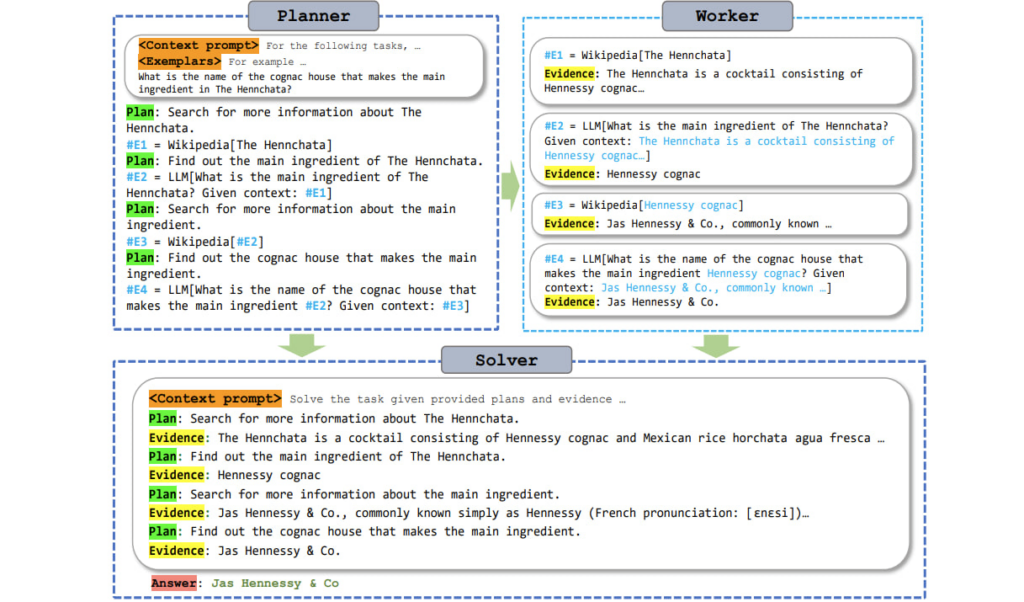

ALMs blend the reasoning capabilities of Large Language Models (LLMs) with tools that allow for knowledge retrieval and action execution. However, existing ALM systems interleave the LLM's reasoning steps with the observations pulled from these tools. This approach, although straightforward and easy to implement, often incurs substantial computational cost, because each step repeats much of the previous prompt and calls the LLM again.
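To make the redundancy concrete, here is a minimal sketch of an interleaved loop. Everything in it is an assumption for illustration: `fake_llm` and `fake_tool` are hypothetical stand-ins rather than real APIs, and "tokens" are approximated by whitespace-separated words. It only shows that the prompt sent to the model grows at every step, because all earlier thoughts and observations are sent again.

```python
def count_tokens(text: str) -> int:
    # Crude proxy: whitespace-separated words, not a real tokenizer.
    return len(text.split())


def fake_llm(prompt: str, step: int) -> str:
    # Hypothetical stand-in for an LLM call; returns a canned thought and action.
    return f"Thought: need fact {step}. Action: Search[fact {step}]"


def fake_tool(action: str) -> str:
    # Hypothetical stand-in for a tool such as a search engine.
    return "Observation: some retrieved text"


def run_interleaved(context: str, num_steps: int) -> list[int]:
    """Return the prompt size (in proxy tokens) of each LLM call."""
    prompt = context
    prompt_sizes = []
    for step in range(1, num_steps + 1):
        prompt_sizes.append(count_tokens(prompt))
        reply = fake_llm(prompt, step)
        observation = fake_tool(reply)
        # The whole history is carried into the next call.
        prompt = f"{prompt}\n{reply}\n{observation}"
    return prompt_sizes


sizes = run_interleaved("Answer the question using tools. Question: who wrote X?", 3)
print(sizes)  # [9, 20, 31]
```

In this toy run the original context is paid for three times and the first step's output twice, which is the kind of repetition the paper targets.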

The ReWOO Solution

ReWOO addresses these challenges by detaching the reasoning process from external observations, significantly reducing token consumption. Comprehensive evaluations across six public NLP benchmarks and a curated dataset show consistent performance gains with the proposed methodology. Notably, ReWOO achieves 5× token efficiency and a 4% accuracy improvement on HotpotQA, a multi-step reasoning benchmark.
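The decoupling can be sketched in a few lines. The paper describes three modules: a Planner that writes the full plan up front, a Worker that runs the tools, and a Solver that produces the answer. The code below is only an illustration under stated assumptions: the planner and solver are hard-coded stand-ins for LLM calls, the `TOOLS` registry and its two tools are hypothetical, and none of it is taken from the released implementation.

```python
import re
from typing import Callable

# Hypothetical tool registry; real tools would call a search API, a calculator, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "Search": lambda query: f"result for '{query}'",
    "Upper": lambda text: text.upper(),
}

Plan = list[tuple[str, str, str]]  # (evidence variable, tool name, tool input)


def planner(question: str) -> Plan:
    # Stand-in for ONE LLM call that writes every step before any tool runs.
    # Later steps refer to earlier results through placeholders such as "#E1".
    return [
        ("#E1", "Search", question),
        ("#E2", "Upper", "#E1"),
    ]


def worker(plan: Plan) -> dict[str, str]:
    # Runs the tools in order; no LLM is involved here.
    evidence: dict[str, str] = {}
    for var, tool_name, tool_input in plan:
        # Replace placeholders with evidence gathered so far.
        resolved = re.sub(
            r"#E\d+", lambda m: evidence.get(m.group(0), m.group(0)), tool_input
        )
        evidence[var] = TOOLS[tool_name](resolved)
    return evidence


def solver(question: str, plan: Plan, evidence: dict[str, str]) -> str:
    # Stand-in for the second and final LLM call, which sees plan and evidence once.
    final_var = plan[-1][0]
    return f"Answer based on {evidence[final_var]}"


def rewoo(question: str) -> str:
    plan = planner(question)
    evidence = worker(plan)
    return solver(question, plan, evidence)


print(rewoo("capital of France"))
```

The point of the design is that the language model is called twice regardless of how many tools the plan uses, so the context is not re-sent at every step.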

Robustness and Scalability

Beyond prompt efficiency, ReWOO also demonstrates robustness under tool-failure scenarios. Because the parametric modules are decoupled from non-parametric tool calls, instruction fine-tuning can be used to offload the reasoning of a large LLM into a smaller language model, substantially reducing model parameters. As an illustration, the team offloads reasoning ability from a 175B GPT-3.5 model into a 7B LLaMA, demonstrating the significant potential for truly efficient and scalable ALM systems.
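One way to picture the tool-failure case: when the plan is fixed before any tool runs, a failing tool only degrades one piece of evidence and does not derail the remaining steps. The sketch below is an assumption about how such handling could look, not the project's code; `flaky_search`, `safe_call`, and the fallback string are all hypothetical.

```python
from typing import Callable


def flaky_search(query: str) -> str:
    # Hypothetical tool that always fails, to simulate an outage.
    raise TimeoutError("search backend unavailable")


def safe_call(tool: Callable[[str], str], tool_input: str) -> str:
    # Turn a tool failure into a piece of evidence instead of an exception,
    # so later steps and the final answer can still be attempted.
    try:
        return tool(tool_input)
    except Exception as exc:
        return f"No evidence found ({type(exc).__name__})"


# A one-step plan: (evidence variable, tool, tool input).
plan = [("#E1", flaky_search, "ReWOO paper authors")]
evidence = {var: safe_call(tool, arg) for var, tool, arg in plan}
print(evidence)  # {'#E1': 'No evidence found (TimeoutError)'}
```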

Conclusion

The ReWOO paradigm represents a significant step forward in the quest for more efficient ALMs. By decoupling reasoning from tool feedback and observations, ReWOO substantially reduces prompting redundancy in prevailing Thought-Action-Observation ALM systems. Its ability to improve accuracy while consuming far fewer tokens, coupled with its robustness under tool-failure cases, lays a solid foundation for future advancements in the field.

For those interested in exploring this further, the full code, model, and data have been released for reproduction on the project’s GitHub repository.

The ReWOO project is a testament to the power of innovation in artificial intelligence and a beacon for future research in the field. It’s a clear demonstration that with the right approach, we can inch closer to truly scalable Artificial General Intelligence (AGI).